An Introduction to AI Agents and OpenAI Assistants

A beginner-friendly introduction to AI Agents and OpenAI Assistants. This post explains what AI Agents are and provides examples of building agents with the OpenAI API and the OpenAI Assistants API.

Themba Mahlangu

AI Agents have hit mainstream media in the past few months, for good reason. They represent a shift in how people use large language models to perform tasks.

AI Agents can perform complex tasks using tools. These agents and tools can be chained together to perform tasks involving multiple steps.

OpenAI recently updated its Assistants API. The API allows users to build complex custom agents without worrying about managing run storage and state.

What is an AI Agent?

An AI agent is a large language model (LLM) that can perform actions using tools.

A basic workflow looks something like the diagram below.

The language model receives input from the user or another agent.

The LLM determines whether it needs to use a tool to perform an action.

If a tool is used, the output is returned to the LLM.

Finally, the LLM returns a response to the user.
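The four steps above can be sketched without calling any API. This is a minimal illustration, not a real agent: `fake_llm`, `get_weather`, the `TOOLS` registry and the `tool_call` message field are all hypothetical stand-ins invented for this sketch, and the "model" simply asks for one tool call and then answers.

```python
import json


def get_weather(city: str) -> str:
    """Toy tool with canned data; a real tool would call an external service."""
    return f"It is sunny in {city}."


# Hypothetical tool registry: tool name -> Python callable.
TOOLS = {"get_weather": get_weather}


def fake_llm(messages):
    """Stand-in for a real model (an assumption of this sketch).

    It requests the weather tool once, then answers using the tool output.
    """
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"Here is what I found: {last['content']}"}
    return {
        "role": "assistant",
        "content": None,
        "tool_call": {"name": "get_weather", "arguments": json.dumps({"city": "Ulaanbaatar"})},
    }


def run_agent(user_input, llm=fake_llm, max_steps=5):
    # Step 1: the model receives input from the user.
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        # Step 2: the model decides whether it needs a tool.
        reply = llm(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            # Step 4: no tool needed, so this is the final response.
            return reply["content"]
        # Step 3: run the tool and return its output to the model.
        args = json.loads(call["arguments"])
        result = TOOLS[call["name"]](**args)
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("Agent did not finish within max_steps")


print(run_agent("What is the weather in Ulaanbaatar?"))
```

The `max_steps` limit is a design choice of the sketch: it stops the loop if the model keeps requesting tools and never produces a final answer.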

The class below is a minimal agent built on the OpenAI chat completions API.

```python
from openai import OpenAI
from termcolor import colored  # pip install termcolor

GPT_MODEL = "gpt-3.5-turbo-0613"


class OpenAIAgent:
    def __init__(self):
        # The client reads the API key from the OPENAI_API_KEY environment variable.
        self.client = OpenAI()

    def chat_completion_request(self, messages, tools=None, tool_choice=None, model=GPT_MODEL):
        # Only pass tools and tool_choice when they are set.
        kwargs = {"model": model, "messages": messages}
        if tools is not None:
            kwargs["tools"] = tools
        if tool_choice is not None:
            kwargs["tool_choice"] = tool_choice
        try:
            return self.client.chat.completions.create(**kwargs)
        except Exception as e:
            print("Unable to generate ChatCompletion response")
            print(f"Exception: {e}")
            # Re-raise so callers never receive an exception object in place of a response.
            raise

    def chat(self, messages, tools=None, tool_choice=None):
        response = self.chat_completion_request(messages, tools=tools, tool_choice=tool_choice)
        return response.choices[0].message

    def pretty_print_conversation(self, messages):
        role_to_color = {
            "system": "red",
            "user": "green",
            "assistant": "blue",
            "function": "magenta",
        }
        for message in messages:
            color = role_to_color.get(message["role"])
            if message["role"] == "system":
                print(colored(f"system: {message['content']}\n", color))
            elif message["role"] == "user":
                print(colored(f"user: {message['content']}\n", color))
            elif message["role"] == "assistant" and message.get("function_call"):
                print(colored(f"assistant: {message['function_call']}\n", color))
            elif message["role"] == "assistant" and not message.get("function_call"):
                print(colored(f"assistant: {message['content']}\n", color))
            elif message["role"] == "function":
                print(colored(f"function ({message['name']}): {message['content']}\n", color))
```

Notes - OpenAIAgent Class

Initialization (__init__): When you create a new OpenAIAgent, it sets up a connection with OpenAI's service so it can talk to OpenAI's models.

Chat Completion Request (chat_completion_request): This function is used to ask OpenAI for a response based on the conversation so far. You tell it what has been said in the conversation, and it asks OpenAI to continue the conversation. If there's an issue with getting a response from OpenAI, it will let you know.

Chat (chat): This is a simpler way to talk to OpenAI. You give it the messages in the conversation, and it uses the chat_completion_request to get a response, and then it gives you back what OpenAI said.

Pretty Print Conversation (pretty_print_conversation): This function goes through the messages in a conversation and prints them out in a way that's easy to read. It changes the color of the text based on who's talking—the system, the user, or the assistant.

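History: Notice that this class does not keep track of the chat history; the caller has to pass the full list of messages on every call. Persistent Threads in the Assistants API, covered next, take care of this.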
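One way to keep the history on the client side is a small wrapper that owns the message list. The sketch below is an illustration under assumptions: `ChatSession` and `echo_chat` are hypothetical names, and `echo_chat` is a stub that replaces the real model so the example runs without an API key. Any callable that takes a list of message dicts and returns an object with a `.content` attribute would fit, which is the shape of the `chat` method above.

```python
from types import SimpleNamespace


class ChatSession:
    """Keeps the running message list so each call sees the whole conversation."""

    def __init__(self, chat_fn, system_prompt=None):
        # chat_fn: callable(messages) -> object with a .content attribute.
        self.chat_fn = chat_fn
        self.messages = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def send(self, user_text):
        # Record the user's turn, ask the model, then record the reply.
        self.messages.append({"role": "user", "content": user_text})
        reply = self.chat_fn(self.messages)
        self.messages.append({"role": "assistant", "content": reply.content})
        return reply.content


def echo_chat(messages):
    """Stub model: reports how many user messages it can see in the history."""
    user_turns = sum(m["role"] == "user" for m in messages)
    return SimpleNamespace(content=f"You have sent {user_turns} message(s).")


session = ChatSession(echo_chat, system_prompt="You are a helpful assistant.")
print(session.send("Hello"))
print(session.send("Are you still there?"))
```

With the real class, the stub would be swapped for `OpenAIAgent().chat`, for example `ChatSession(OpenAIAgent().chat)`.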

OpenAI Assistants

The OpenAI Assistants API also implements agents, with built-in capabilities and a well-structured API.

Assistants can call OpenAI’s models with specific instructions.

Assistants can access tools such as Code Interpreter.

Assistants can access persistent Threads (built-in message history).

Assistants can access files in several formats.

Two key advantages of OpenAI Assistants over other agent implementations are:

Clear documentation of the key components of assistants.

Well-defined Run lifecycle management. This makes it easy to build rich user interfaces.

Key Components

| Object | Description |
|---|---|
| Assistant | An agent that uses a tool |
| Thread | A conversation session between an Assistant and a user or other agents. Threads store Messages |
| Message | A message created by an Assistant or a user. Messages can include text and other files. Images are not yet supported. |
| Run | A Run is a collection of steps that the assistant and the system take to achieve a task. |
| Run Step | A detailed list of steps the Assistant took as part of a Run. An Assistant can call tools or create Messages during its run. |

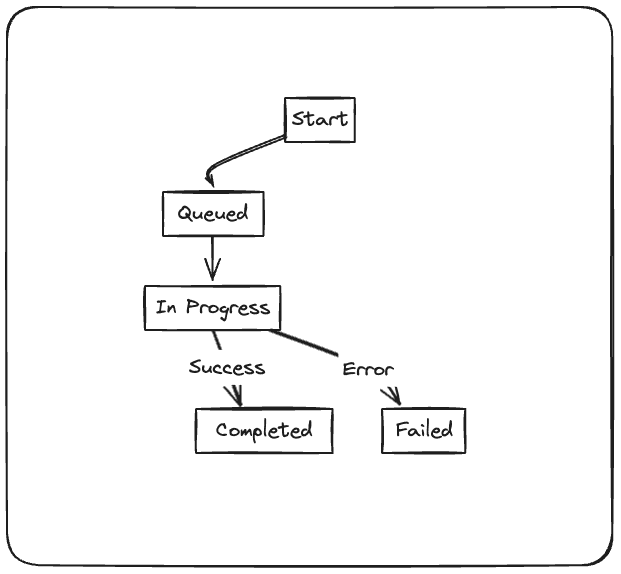
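To make the objects in the table concrete, here is a rough sketch of how they map onto the beta Assistants endpoints of the `openai` Python client (v1.x is assumed). The function name `start_run`, the assistant name and the instructions are made up for illustration, and the model name is left to the caller.

```python
def start_run(client, model, user_text):
    """Create an Assistant, a Thread with one user Message, and a Run.

    `client` is expected to be an openai.OpenAI() instance (an assumption of this sketch).
    """
    # Assistant: the agent, with its instructions and tools.
    assistant = client.beta.assistants.create(
        name="Demo assistant",
        instructions="You are a helpful assistant.",
        tools=[{"type": "code_interpreter"}],
        model=model,
    )
    # Thread: the conversation session that stores Messages.
    thread = client.beta.threads.create()
    # Message: the user's input, stored on the Thread.
    client.beta.threads.messages.create(
        thread_id=thread.id, role="user", content=user_text
    )
    # Run: asks the Assistant to work through the Thread.
    run = client.beta.threads.runs.create(
        thread_id=thread.id, assistant_id=assistant.id
    )
    return assistant, thread, run


# Usage (needs an API key, so it is left commented out):
# from openai import OpenAI
# assistant, thread, run = start_run(OpenAI(), "<model name>", "Plot y = x ** 2")
```

The Run returned here has only been created; it still has to move through the lifecycle described in the next section before the Thread contains a reply.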

Run lifecycle (Steps taken to complete a task)

Here is a simplified view of the steps taken to complete a run.

A run is first queued and, in most cases, proceeds to in_progress.

While in progress, the run can move to the requires_action state, where it waits for the results of external tools.

Tool outputs are passed back to the agent for processing, and the run continues until it reaches a final state such as completed or failed.
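The lifecycle above can be driven with a simple polling loop. This is a sketch under assumptions rather than a reference implementation: it assumes the beta Assistants endpoints of the `openai` Python client (v1.x), and `wait_for_run` and the `tools` dictionary (tool name to Python function) are hypothetical helpers introduced here.

```python
import json
import time


def wait_for_run(client, thread_id, run_id, tools, poll_seconds=1.0):
    """Poll a Run until it reaches a final status, answering tool calls on the way."""
    final_statuses = {"completed", "failed", "cancelled", "expired"}
    while True:
        run = client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run_id)
        if run.status in final_statuses:
            return run
        if run.status == "requires_action":
            # The run is waiting for us to execute the requested tools.
            outputs = []
            for call in run.required_action.submit_tool_outputs.tool_calls:
                fn = tools[call.function.name]
                args = json.loads(call.function.arguments)
                outputs.append({"tool_call_id": call.id, "output": str(fn(**args))})
            # Pass the tool outputs back so the run can continue.
            client.beta.threads.runs.submit_tool_outputs(
                thread_id=thread_id, run_id=run_id, tool_outputs=outputs
            )
        else:
            # queued or in_progress: wait before checking again.
            time.sleep(poll_seconds)
```

A call would look like `wait_for_run(client, thread.id, run.id, {"search": search})`. Polling is the simplest option; a production interface would likely also want a timeout.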

Building an Assistant

Instead of building the assistant directly against the Assistants API, we can use the marvin.ai library from Prefect to simplify the process.

Install Marvin and add your API key.

```shell
pip install marvin
```

Let’s create a simple assistant that can search Google and return the results.

```python
from marvin.beta.assistants import Assistant, Thread
from marvin.beta.assistants.formatting import pprint_messages


# write a function for the assistant to use
def search(query: str) -> str:
    """Search the web for the given query."""
    # run a search here; this placeholder returns a fixed string instead
    result = f"No search backend is configured (query: {query!r})."
    return result


def run_assistant(user_message):
    ai = Assistant(tools=[search])
    # create a thread - you could pass an ID to resume a conversation
    thread = Thread()
    # add the user's message to the thread
    thread.add(user_message)
    # run the thread to generate a response
    thread.run(ai)
    messages = thread.get_messages()
    pprint_messages(messages)


# usage
run_assistant("Fetch results for cars in mongolia")
```

Notes - This demonstrates how to build a simple assistant that can search Google for results based on user input.

Search Function (search): A placeholder function intended to execute a search query. In a complete example, this would contain the logic to perform the search and return results.

Assistant Setup (run_assistant):

Initializes an assistant configured with the search function as a tool.

Sets up a conversation thread, which is a series of back-and-forth messages between the user and the assistant. This is stored and managed by OpenAI.

Runs the assistant within the thread, using the user's message ("Fetch results for cars in mongolia") as input.

Output: Retrieves and prints messages from the conversation, displaying the search query and any responses generated by the assistant.

In fewer than 22 lines, we have created an assistant capable of writing code and performing searches.

Useful links

Marvin - Lightweight, easy to use and modify.

Cookbook - OpenAI cookbook for tool calling.

How assistants work - OpenAI docs on assistants.

Any Questions?

https://calendly.com/advantch-themba/refinement-meeting

Cheers,

Themba